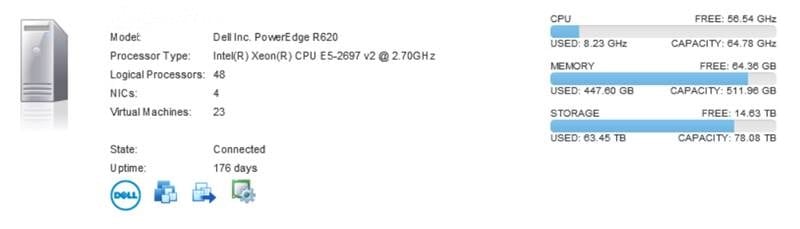

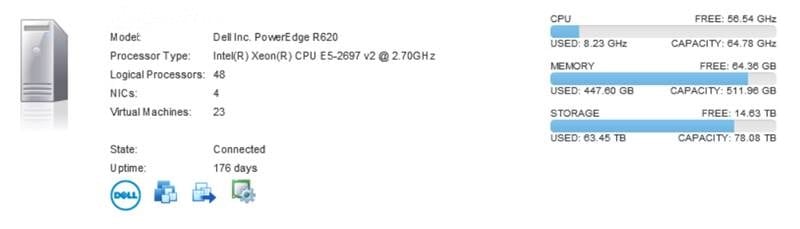

CPU utilization is one of the most important aspects of virtualization. RAM has gotten cheap and plentiful, motherboards have more DIMM slots than ever, and it's very easy to put several dozen VMs on a single virtualization host. There's a big problem, though: the default CPU utilization metrics that VMware gives you don't tell the whole story. Take the host utilization summary in the screenshot as an example:

According to this, it appears that we have LOADS of CPU availability, but our memory is maxed out. The memory statement is fairly accurate (assuming the VMs are properly sized), but the CPU statement isn’t necessarily true.

The VMware metric shown here represents only the current active CPU utilization. On the left, we can see that the host has 24 physical cores (excluding hyper-threading) at 2.7 GHz. This metric would show 100 percent CPU usage only if VMs were actively running all 24 cores fully maxed out.
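To make that arithmetic concrete, here is a minimal Python sketch of how the utilization percentage relates to the host's total capacity. It assumes capacity is simply physical cores times nominal clock speed (hyper-threads excluded), and the 30 GHz "in use" figure is a hypothetical value, not one read from the screenshot.

```python
def host_cpu_capacity_ghz(physical_cores: int, clock_ghz: float) -> float:
    """Total CPU capacity, counting physical cores only (no hyper-threads)."""
    return physical_cores * clock_ghz


def host_cpu_utilization_pct(used_ghz: float, physical_cores: int, clock_ghz: float) -> float:
    """Active CPU usage as a percentage of total capacity.

    This only reaches 100% when VMs are actively consuming every physical
    core at full clock speed. It says nothing about how long vCPUs waited
    before they were allowed to run.
    """
    return used_ghz / host_cpu_capacity_ghz(physical_cores, clock_ghz) * 100


# 24 physical cores at 2.7 GHz, as in the host summary.
capacity = host_cpu_capacity_ghz(24, 2.7)  # roughly 64.8 GHz
# Hypothetical: 30 GHz of active demand from all VMs combined.
usage = host_cpu_utilization_pct(30.0, 24, 2.7)
print(f"capacity={capacity:.1f} GHz, utilization={usage:.1f}%")
```

A host can look half idle by this measure while individual VMs still spend a lot of time waiting for cores, which is exactly the gap the rest of this post is about.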

Besides CPU utilization, there is another super important VMware virtualization concept at play here: CPU co-scheduling. Because a host normally has more virtual CPUs than physical ones, VMware has to keep switching which vCPUs have access to the physical cores so it can share them among the workloads.

In practice, this means that if a host has a 16-vCPU VM, it needs 16 physical cores available to run that workload. (Yes, I am skipping a LOT of detail as to how this works. This whitepaper from VMware is an excellent read.) If the host is busy, then for this 16-vCPU VM to process its workload, 16 other virtual CPUs have to be kicked off the physical cores to free up room. The time spent waiting for that to happen shows up as a state called CPU ready: the VM's vCPUs are ready to do work, but they can't run because the host's CPUs are overcommitted and the scheduler can't free up enough cores for this workload.
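The effect of VM width on waiting time can be illustrated with a toy model. This Python sketch assumes strict co-scheduling exactly as described above (a VM runs in a given tick only if all of its vCPUs can get a physical core at once). Real ESXi uses a more relaxed co-scheduler, so treat this as an illustration of the trend, not a model of the actual scheduler. The per-tick load values are hypothetical.

```python
def ready_fraction(vm_vcpus: int, host_cores: int, busy_cores_per_tick: list[int]) -> float:
    """Fraction of ticks a VM spends 'ready' (waiting) in a strict
    co-scheduling toy model: the VM runs in a tick only if at least
    vm_vcpus physical cores are free; otherwise all of its vCPUs wait.
    """
    if vm_vcpus > host_cores:
        raise ValueError("VM has more vCPUs than the host has physical cores")
    if not busy_cores_per_tick:
        return 0.0
    waiting = sum(1 for busy in busy_cores_per_tick if host_cores - busy < vm_vcpus)
    return waiting / len(busy_cores_per_tick)


# Hypothetical number of cores used by other VMs on a 24-core host, per tick.
other_load = [4, 10, 12, 16, 20, 8, 14, 18, 6, 22]

for vcpus in (2, 8, 16):
    print(f"{vcpus:>2} vCPUs -> ready {ready_fraction(vcpus, 24, other_load):.0%} of ticks")
```

With the same background load, the 2-vCPU VM never waits in this model, while the 16-vCPU VM waits in most ticks, because it needs far more cores to be free at the same moment.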

There are two places to see this metric: ESXtop and the advanced performance charts in vCenter. ESXtop reports it as a percentage (the %RDY column), while the advanced performance chart for a VM in vCenter reports it as a time in milliseconds. Realistically, the percentage is what we need, and the general industry rule of thumb is this: if it's higher than five percent, watch it very closely. ESXtop gives a nice single screen that shows the ready times for every VM on that host. In vCenter, you have to look per-VM, and in ms instead of a percentage. You can convert using some math (or this simple website). Each VM will also have a different ready time: VMs with more vCPUs accumulate high CPU ready more easily because they have to wait for more cores to be free.
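The "some math" is a straightforward ratio: ready milliseconds divided by the length of the sample interval. Here is a minimal Python sketch. It assumes the standard vCenter chart intervals (20 seconds for real-time, then 5 minutes, 30 minutes, 2 hours and 1 day for the day, week, month and year views), and that a VM-level value is the sum across all of its vCPUs, so dividing by the vCPU count gives a per-vCPU average to compare against the 5 percent rule of thumb. The function names are illustrative only.

```python
# Sample interval, in seconds, for each vCenter performance chart view.
CHART_INTERVAL_SECONDS = {
    "realtime": 20,
    "day": 300,
    "week": 1800,
    "month": 7200,
    "year": 86400,
}

READY_WARN_PCT = 5.0  # rule-of-thumb threshold from the text


def ready_ms_to_pct(ready_ms: float, chart: str = "realtime", vcpus: int = 1) -> float:
    """Convert a vCenter CPU Ready summation (ms) into a percentage.

    ready_ms is the value for one sample of the chosen chart view.
    Passing the VM's vCPU count turns a VM-level total into an
    average per-vCPU percentage.
    """
    if vcpus < 1:
        raise ValueError("vcpus must be at least 1")
    interval_s = CHART_INTERVAL_SECONDS[chart]
    return ready_ms * 100 / (interval_s * 1000) / vcpus


def needs_attention(ready_pct: float) -> bool:
    """True when CPU ready is above the 5% watch-closely threshold."""
    return ready_pct > READY_WARN_PCT


print(ready_ms_to_pct(1000))           # 1,000 ms in a 20 s real-time sample -> 5.0
print(ready_ms_to_pct(8000, vcpus=4))  # 4-vCPU VM, per-vCPU average -> 10.0
```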

I've logged into environments multiple times to troubleshoot performance problems, and the customer has told me that it couldn't be CPU because CPU utilization was low in vCenter. That sounds plausible on the surface, but it's not enough; a little validation of what is really happening is an important next step. Once we looked at the actual VM details, CPU ready was very high because all of the VMs had a lot of vCPUs. Counter-intuitively, lowering the vCPU count of each VM actually increased their performance and the overall utilization of the host.

All this to say: a wise system administrator should definitely dig into the virtual environment to see its real utilization, not just the host summary. If you don't want to spend a lot of time going through every VM to evaluate CPU ready, four vCPUs per physical core (4:1) is a fairly safe ratio, but make sure you account for host redundancy.

However, if your VMs' CPUs are heavily utilized (always running full loads), you may not be able to go beyond 2:1 or even 1:1. On the flip side, if your VMs sit around doing nothing most of the time, your ratio may be more like 6:1. Remember, don't count hyper-threading in these calculations; count only the physical cores.
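The ratio guidance above, combined with host redundancy, can be turned into a quick sizing check. This is a minimal Python sketch with hypothetical helper names and a hypothetical 3-host cluster. It assumes "accounting for host redundancy" means the vCPU budget must still fit at your chosen ratio after one host (N+1) is lost, and it counts physical cores only, never hyper-threads.

```python
def max_vcpus(hosts: int, cores_per_host: int, ratio: float, host_failures_tolerated: int = 1) -> int:
    """Hypothetical sizing helper: how many vCPUs a cluster can carry at a
    given vCPU:pCore ratio while still fitting after the specified number
    of hosts fail. Physical cores only; hyper-threads are excluded.
    """
    surviving_hosts = hosts - host_failures_tolerated
    if surviving_hosts < 1:
        raise ValueError("need at least one surviving host")
    return int(surviving_hosts * cores_per_host * ratio)


def current_ratio(total_vcpus: int, hosts: int, cores_per_host: int) -> float:
    """Current vCPU:pCore ratio across the whole cluster with all hosts up."""
    return total_vcpus / (hosts * cores_per_host)


# Hypothetical cluster: 3 hosts with 24 physical cores each, N+1 redundancy.
for ratio in (1, 2, 4, 6):
    print(f"{ratio}:1 -> up to {max_vcpus(3, 24, ratio)} vCPUs")
```

For this example cluster, 4:1 with N+1 allows 192 vCPUs (2 surviving hosts × 24 cores × 4). Which ratio is appropriate still depends on how busy the VMs actually are, so CPU ready remains the number to verify.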

As always, each environment is very different and requires its own attention.