When it comes to configuring your virtual environment, it’s important to select the right disk types for your needs. Otherwise, you could face outages, wasted storage, or a lot of time spent on the back end reconfiguring your settings.

Thin Disk

This type of virtual disk allocates storage on demand: you set the disk’s maximum size, but space on the datastore is consumed only as data is actually written. This is a good option if you want to control costs and scale out your storage over time. However, you need to pay closer attention to your disk sizes so you don’t overprovision and overcommit your datastore beyond what it can physically hold. Additionally, since space is allocated on the fly, you might take some performance hits on initial writes that you wouldn’t encounter with a thick eager zeroed disk. This is because each newly allocated block must first be zeroed to ensure the space is empty before the actual data is written.

You might also see some fragmentation on the datastore with this method, which can further degrade performance. How much depends on your storage type, your vendor, and how your array is configured.

Thin disks are a good option if you’ve got an application that “requires” much more storage than you know it will use or if you’re unable to accurately predict growth.

If you’re curious about how Hyper-V does this, there is a parallel option called dynamically expanding disks.
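As a rough illustration of the monitoring that thin disks require, the Python sketch below compares the total provisioned size of a set of thin disks against a datastore’s capacity. The disk names, sizes, and the 80% alert threshold are hypothetical; in practice the numbers would come from vCenter or your monitoring tool rather than being typed in.

```python
from dataclasses import dataclass


@dataclass
class ThinDisk:
    name: str
    provisioned_gb: float  # maximum size the guest OS sees
    used_gb: float         # space actually consumed on the datastore


def overcommit_report(capacity_gb: float, disks: list[ThinDisk],
                      alert_fraction: float = 0.8) -> dict:
    """Summarize how far thin disks overcommit a datastore of `capacity_gb`."""
    provisioned = sum(d.provisioned_gb for d in disks)
    used = sum(d.used_gb for d in disks)
    return {
        # > 1.0 means the disks could, in total, grow past the datastore's capacity
        "overcommit_ratio": provisioned / capacity_gb,
        "free_gb": capacity_gb - used,
        # how much space would be missing if every disk filled up completely
        "shortfall_if_full_gb": max(0.0, provisioned - capacity_gb),
        # hypothetical alert rule: flag once real usage passes alert_fraction
        "needs_attention": used / capacity_gb >= alert_fraction,
    }


if __name__ == "__main__":
    # Hypothetical disks on a hypothetical 1,000 GB datastore.
    disks = [ThinDisk("app01", 500, 120), ThinDisk("db01", 400, 200),
             ThinDisk("web01", 300, 80)]
    print(overcommit_report(1000, disks))
```

Here 1,200 GB is promised on 1,000 GB of real capacity. Only 400 GB is in use today, so nothing is flagged yet, but the datastore would come up 200 GB short if every disk grew to its full size.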

Thick Lazy Zeroed Disk

If you want to pre-allocate the space for your disk, one option is a thick lazy zeroed disk. All of its space is reserved on the datastore when the disk is created, but each block is zeroed only when it is first written. Because the space is reserved up front, no other files can end up in the middle of it, so it isn’t subject to the fragmentation problem mentioned above, and capacity utilization is easier to track.

If you have a clear picture of how much space you’ll use, as during migrations, you may want to go with the thick lazy zeroed option. Also, if your back-end storage already uses thin provisioning, more often than not you’ll want thick disks here simply so you don’t have to monitor space utilization in two places. Managing thin provisioning and oversubscription on both the back-end SAN and the front-end virtual disks at the same time can cause a lot of unnecessary headaches, since either or both layers can run out of space.

Thick Eager Zeroed Disk

Generally, we don’t use this option unless a vendor requires it. For example, Microsoft clusters and some Oracle products often require it in order to qualify for support.

When you provision a thick eager zeroed disk, VMware pre-allocates the space and zeroes all of it ahead of time. In other words, creation takes a while, and all it buys you is faster performance on the first write to each block. We don’t often see much benefit in this, since you enjoy the perk only once per block; it doesn’t speed up any of the countless overwrites that follow. (In Hyper-V, by the way, the equivalent is called a fixed size disk.)
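To make the zeroing trade-off between the three types concrete, here is a toy model in Python. It is our own simplification, not how VMware actually implements any of this: blocks are abstract units, "allocated" means reserved on the datastore, and the model simply counts when each block gets zeroed.

```python
from enum import Enum


class DiskType(Enum):
    THIN = "thin"
    LAZY_ZEROED = "thick lazy zeroed"
    EAGER_ZEROED = "thick eager zeroed"


class ToyDisk:
    """Toy model: tracks allocation and zeroing for a disk of `blocks` blocks."""

    def __init__(self, disk_type: DiskType, blocks: int) -> None:
        self.blocks = blocks
        # Thin disks reserve nothing up front; both thick types reserve every block.
        self.allocated = set() if disk_type is DiskType.THIN else set(range(blocks))
        # Only eager zeroed disks zero every block at creation time.
        self.zeroed = set(range(blocks)) if disk_type is DiskType.EAGER_ZEROED else set()
        self.zero_ops_at_creation = len(self.zeroed)
        self.zero_ops_on_write = 0  # zeroing deferred to the write path

    def write(self, block: int) -> None:
        if not 0 <= block < self.blocks:
            raise IndexError(f"block {block} is outside the disk")
        self.allocated.add(block)  # thin: allocate on first touch; no-op for thick
        if block not in self.zeroed:
            # First write to this block: it must be zeroed before the data lands.
            self.zeroed.add(block)
            self.zero_ops_on_write += 1


if __name__ == "__main__":
    for disk_type in DiskType:
        disk = ToyDisk(disk_type, blocks=100)
        for _ in range(2):  # write blocks 0-9, then overwrite the same blocks
            for block in range(10):
                disk.write(block)
        print(f"{disk_type.value:>20}: allocated={len(disk.allocated):3} "
              f"zeroed at creation={disk.zero_ops_at_creation:3} "
              f"zeroed on write={disk.zero_ops_on_write:3}")
```

In this model the thin and lazy zeroed disks each pay for 10 zeroing operations during the first pass of writes, the eager zeroed disk pays for all 100 at creation, and the second pass of overwrites adds no zeroing for any type. That mirrors the point above: eager zeroing only helps the first write to each block.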

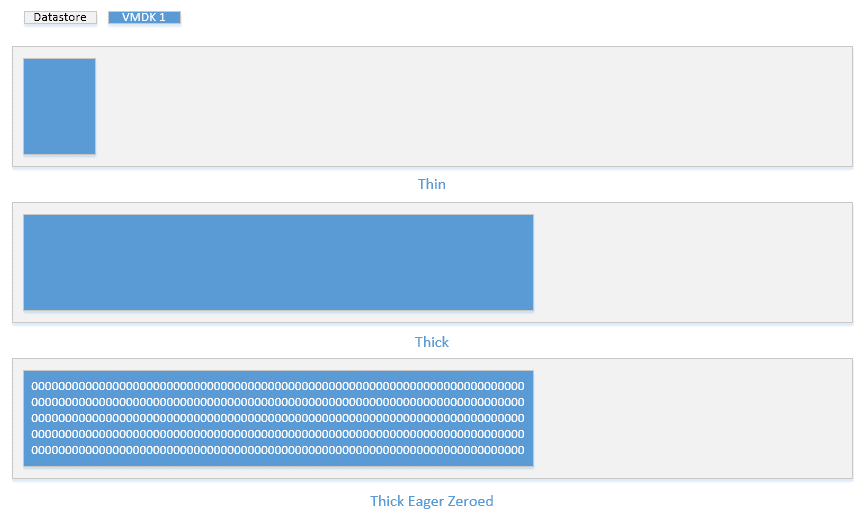

The diagram below illustrates the difference between the three types. If you create VMDKs of the same size using each of the three types, they will look roughly like this on the datastore:

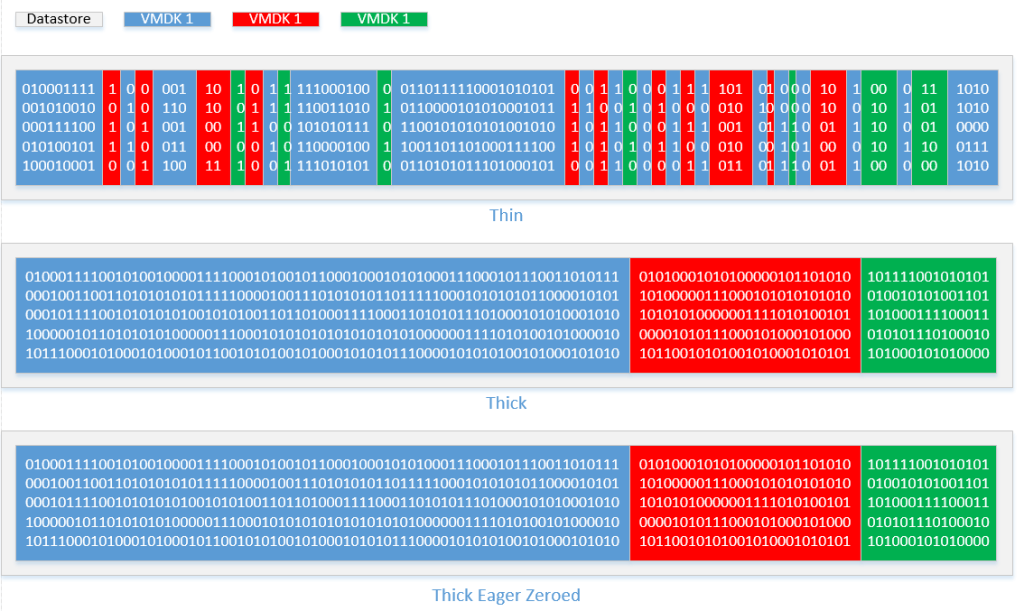

If you then write to the disks over time and create a couple of other disks, the datastore will look like this:

Raw Device Mapping (RDM)

With a Raw Device Mapping, an entire volume from the storage array is presented directly to a single VM, which has full control over that volume. Because the VM works with the array’s volume directly rather than with a disk file on a datastore, it can take advantage of SAN features that may not be accessible with a virtual disk format. You would use this for clustering, SAN-based snapshots, SAN-based replication, or the ability to move a disk from a physical server to a virtual one or vice versa.

There are two types of RDM: physical and virtual. A physical RDM presents the volume exactly as it appears on the storage array, with no abstraction layer. A virtual RDM, by contrast, puts a “wrapper” around the volume so that it appears to be a virtual disk, which lets you take VMware snapshots and perform Storage vMotion on it. (Products like Veeam that rely on VMware snapshots work only with virtual mode RDMs.) We typically use virtual RDMs as a path to VMDK, since a virtual RDM can be converted into a VMDK with Storage vMotion. We configure physical RDMs only when a vendor requires them, for things like clusters.
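If you create disks from the ESXi shell, the formats discussed here correspond to options of the `vmkfstools` utility: `-d thin`, `-d zeroedthick` and `-d eagerzeroedthick` for the three VMDK types, and `-r` (virtual compatibility) or `-z` (physical compatibility) to create an RDM mapping file. The Python sketch below only builds the command lines so you can review them before running anything. The datastore path, VM folder, and LUN identifier are placeholders, and you should confirm the options against the `vmkfstools` documentation for your ESXi version.

```python
import shlex

# vmkfstools -d values for the three VMDK formats.
VMDK_FORMATS = {
    "thin": "thin",
    "thick lazy zeroed": "zeroedthick",
    "thick eager zeroed": "eagerzeroedthick",
}

# vmkfstools flags for the two RDM compatibility modes.
RDM_FLAGS = {
    "virtual": "-r",   # virtual compatibility mode (VMware snapshots, Storage vMotion)
    "physical": "-z",  # physical compatibility (pass-through) mode
}


def create_vmdk_cmd(size: str, disk_format: str, vmdk_path: str) -> list[str]:
    """Build (but do not run) a vmkfstools command that creates a VMDK."""
    return ["vmkfstools", "-c", size, "-d", VMDK_FORMATS[disk_format], vmdk_path]


def create_rdm_cmd(device: str, mode: str, mapping_path: str) -> list[str]:
    """Build (but do not run) a vmkfstools command that creates an RDM mapping file."""
    return ["vmkfstools", RDM_FLAGS[mode], device, mapping_path]


if __name__ == "__main__":
    # Hypothetical datastore, VM folder, and LUN identifier; substitute your own.
    vm_dir = "/vmfs/volumes/datastore1/app01"
    lun = "/vmfs/devices/disks/naa.EXAMPLE_LUN_ID"
    print(shlex.join(create_vmdk_cmd("40G", "thick eager zeroed", f"{vm_dir}/app01.vmdk")))
    print(shlex.join(create_rdm_cmd(lun, "virtual", f"{vm_dir}/app01_rdm.vmdk")))
```

Keeping the mappings in two small tables makes the choice explicit and lets an unknown format or mode fail with a `KeyError` instead of silently producing a wrong command.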

We commonly see people create RDMs in the mistaken belief that they’ll gain performance. In reality, the performance difference between an RDM and any of the VMDK types is negligible, and if you don’t need an RDM for a cluster or a vendor requirement, you’re sacrificing flexibility and the other benefits that come with virtualization. In VMware’s early days there was a large performance gap between RDMs and VMDKs, but VMware has since closed it.